Introduction

While developing websites, you may often run into a situation where an external service notifies you of some event via a webhook. In my case, I was using Stripe and wanted to test my webhook ingestion. My usual course of action was to find the relevant webhook, copy and paste its body into something like Postman, and manually debug the code. However, this can be quite a chore, and it doesn't really test the ingestion script.

I wanted an external service that would receive all of the webhooks, which I could then download and replay locally in my environment. There are many commercial solutions that do this.

One example is Ngrok. While Ngrok solves the problem, I did not want to pay for it just to get a stable URL, and I wanted to collect the webhooks as they arrived and download them whenever I was ready to process them.

Enter AWS Lambda, SQS, and a couple of Python scripts.

TLDR

View the code on Github

Overview

Our goal is to have an externally facing URL which can receive a webhook. It will then push the body of the received webhook into a queue to be processed when we are ready. A Python script will download the data, and submit it to our application.

The SQS Queue

SQS is a service which simply queues up work. It holds each message until a consumer asks for it (or until the message expires at the end of the queue's retention period). Since our local environment is not reachable from the internet, we will use the "polling" method: our client periodically asks the queue whether there is any work available, and if there is, our code processes it.
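To make the polling idea concrete, here is a minimal sketch of a polling loop. It assumes a boto3-style queue object (such as the `Queue` resource returned by `boto3.resource('sqs').get_queue_by_name(QueueName=...)`) whose `receive_messages` method returns messages that have a `body` and a `delete()` method; `poll` and its parameters are hypothetical names for illustration. It uses SQS long polling (`WaitTimeSeconds`, at most 20 seconds), which keeps each request open until a message arrives or the wait expires, so an idle queue produces fewer empty responses than rapid short polls.

```python
import time


def poll(queue, handle, iterations=None, wait_seconds=20, idle_sleep=0.0):
    """Repeatedly ask `queue` for messages and pass each body to `handle`.

    `queue` is assumed to behave like a boto3 SQS Queue resource.
    `iterations=None` polls forever; a number limits the loop (useful in tests).
    """
    count = 0
    while iterations is None or count < iterations:
        # Long polling: SQS waits up to wait_seconds for a message to arrive
        # before returning, instead of answering "empty" immediately.
        messages = queue.receive_messages(
            MaxNumberOfMessages=10,  # SQS returns at most 10 per call
            WaitTimeSeconds=wait_seconds,
        )
        for message in messages:
            handle(message.body)
            # Only reached if handle() did not raise, so failed messages stay queued
            message.delete()
        if not messages and idle_sleep:
            time.sleep(idle_sleep)
        count += 1
```

Because `delete()` is only called after `handle` returns, a message whose handling raises an exception is left in the queue, and SQS makes it visible again once its visibility timeout expires so it can be retried.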

In order to set up a new queue, log into AWS and search for SQS, or Click Here.

At the top right, click "Create Queue".

Here, you need to set a name and pick the type of queue. If the order of events is important to you, you can choose a FIFO (First In, First Out) queue. For my purposes, ordering is less important, since the best-effort ordering of a Standard queue is sufficient for the volume of web requests I will receive in this demo. Be sure to choose what is right for your project, as you will need to recreate your queue if you decide to change this later.

Finally, give your queue a name. Click "Create Queue" to finish the setup of the queue object.
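If you do choose a FIFO queue, two things change, sketched below under the assumption that you send messages with boto3: the queue name must end in `.fifo`, and every `SendMessage` call needs a `MessageGroupId`, plus a `MessageDeduplicationId` unless content-based deduplication is enabled on the queue. The helper names and the `'webhooks'` group ID are hypothetical.

```python
import hashlib


def is_fifo_queue_name(name):
    # SQS requires FIFO queue names to end in ".fifo"
    return name.endswith('.fifo')


def send_message_kwargs(body, fifo=False, group_id='webhooks'):
    """Build keyword arguments for an SQS SendMessage call.

    group_id 'webhooks' is a hypothetical default; any string works.
    """
    kwargs = {'MessageBody': body}
    if fifo:
        # One group ID means all messages form a single ordered stream
        kwargs['MessageGroupId'] = group_id
        # SHA-256 hex digest of the body (64 chars), similar to what
        # content-based deduplication does when it is enabled on the queue
        kwargs['MessageDeduplicationId'] = hashlib.sha256(body.encode('utf-8')).hexdigest()
    return kwargs
```

The returned dictionary can be unpacked into a send call, for example `queue.send_message(**send_message_kwargs(body, fifo=True))`. Note that with hash-based deduplication, two identical bodies sent within SQS's five-minute deduplication interval are treated as one message.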

Copy the "URL", "Name", and "ARN" fields from the queue overview, as we will need these later.
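Since all three values end up in configuration later, a quick consistency check can save some debugging. This sketch relies on two formats: an SQS ARN looks like `arn:<partition>:sqs:<region>:<account-id>:<queue-name>`, and the path of the queue URL is `/<account-id>/<queue-name>`. The function names are hypothetical.

```python
from urllib.parse import urlparse


def parse_sqs_arn(arn):
    """Split an SQS queue ARN into region, account ID and queue name."""
    parts = arn.split(':')
    if len(parts) != 6 or parts[0] != 'arn' or parts[2] != 'sqs':
        raise ValueError('not an SQS queue ARN: ' + arn)
    return {'region': parts[3], 'account_id': parts[4], 'name': parts[5]}


def check_queue_details(url, name, arn):
    """Return a list of mismatches between the copied URL, Name and ARN."""
    info = parse_sqs_arn(arn)
    problems = []
    if info['name'] != name:
        problems.append('ARN queue name %r does not match Name %r' % (info['name'], name))
    # Only the path is checked, so this works regardless of the endpoint host
    expected_path = '/{account_id}/{name}'.format(**info)
    actual_path = urlparse(url).path
    if actual_path != expected_path:
        problems.append('URL path %r should be %r' % (actual_path, expected_path))
    return problems
```

An empty list means the three values agree; anything else points at the field that was copied incorrectly.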

The Lambda Function

We will use Lambda to accept the incoming webhook and push the data onto the queue.

Lambda allows us to deploy a small piece of code which will run on Amazon's servers. We are only billed while this code is actually executing, which saves us some money. Additionally, we can copy and paste some code and get it deployed quickly.

Find the Lambda section on AWS, or Click Here.

Click the "Create Function" button in the top right. Choose the "Author from scratch" option and give the function a name. For the "Runtime" section, select Python 3.7 (a newer Python 3 runtime should also work with the code below). Finally, hit "Create Function". This will take you to a designer page.

We want this code to execute when a web request comes in, so click "Add trigger" on the left side of the designer.

Here, select "API Gateway" from the menu. Select "HTTP API" for the API Type, and "Open" for the security policy. This will allow our function to be executed via an HTTP request to the generated URL.
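The Lambda function receives the HTTP request as an `event` dictionary. With API Gateway proxy integrations, the request body arrives as a string under `body`, and API Gateway sets `isBase64Encoded` to true when it has base64-encoded that body (for example, for content it treats as binary). The sketch below, using the hypothetical helper name `extract_json_body`, decodes the body in either case and returns `None` if it is missing or is not valid JSON.

```python
import base64
import json


def extract_json_body(event):
    """Return the parsed JSON body of an API Gateway proxy event, or None."""
    body = event.get('body')
    if body is None:
        return None
    try:
        if event.get('isBase64Encoded'):
            # API Gateway base64-encoded the body; turn it back into text
            body = base64.b64decode(body).decode('utf-8')
        return json.loads(body)
    except ValueError:
        # Covers bad base64 (binascii.Error), bad UTF-8 and bad JSON,
        # all of which are ValueError subclasses
        return None
```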

Permissions

Our Lambda function needs permission to access the SQS queue. To grant it, we need to add a new IAM policy to the role created for the Lambda function. Click on the Permissions tab, then click the link under "Role Name".

We want to attach a policy to this role. Because this is a one-off policy, we can use the "Add Inline Policy" button to attach our policy.

Click on the JSON tab, and add the following permissions, being sure to include the ARN of your SQS queue.

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "CbkTutorial1",
            "Effect": "Allow",
            "Action": [
                "sqs:DeleteMessage",
                "sqs:GetQueueUrl",
                "sqs:ChangeMessageVisibility",
                "sqs:DeleteMessageBatch",
                "sqs:SendMessageBatch",
                "sqs:PurgeQueue",
                "sqs:DeleteQueue",
                "sqs:SendMessage",
                "sqs:GetQueueAttributes",
                "sqs:CreateQueue",
                "sqs:ChangeMessageVisibilityBatch",
                "sqs:SetQueueAttributes"
            ],
            "Resource": "your:sqs:arn:goes:here"
        },
        {
            "Sid": "CbkTutorial2",
            "Effect": "Allow",
            "Action": "sqs:ListQueues",
            "Resource": "*"
        }
    ]
}
```

Your Lambda function should now have permissions to publish to your SQS queue.
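The policy above grants more than this tutorial strictly needs, including `sqs:DeleteQueue` and `sqs:PurgeQueue`. If you prefer least privilege, the Lambda code below only calls `GetQueueUrl` (via `get_queue_by_name`) and `SendMessage`, and the client later in this article only needs `GetQueueUrl`, `ReceiveMessage`, and `DeleteMessage`. Here is a small sketch that generates such a policy as JSON; the function names, constant names, and statement ID are hypothetical, and you still need to substitute your own queue ARN.

```python
import json

# Actions the Lambda code calls: get_queue_by_name -> GetQueueUrl,
# queue.send_message -> SendMessage
LAMBDA_ACTIONS = ['sqs:GetQueueUrl', 'sqs:SendMessage']

# Actions the local client calls: get_queue_by_name, receive_messages, message.delete
CLIENT_ACTIONS = ['sqs:GetQueueUrl', 'sqs:ReceiveMessage', 'sqs:DeleteMessage']


def sqs_policy(queue_arn, actions):
    """Return an IAM policy document (as a dict) allowing `actions` on one queue."""
    return {
        'Version': '2012-10-17',
        'Statement': [
            {
                'Sid': 'WebhookQueueAccess',  # hypothetical statement ID
                'Effect': 'Allow',
                'Action': sorted(actions),
                'Resource': queue_arn,
            }
        ],
    }


def policy_json(queue_arn, actions):
    # Pretty-printed JSON, ready to paste into the console's JSON tab
    return json.dumps(sqs_policy(queue_arn, actions), indent=2)
```

For example, `print(policy_json('<your queue ARN>', LAMBDA_ACTIONS))` prints a policy you could paste in place of the one above.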

Back to Lambda

Now we need to configure some code to run inside our Lambda function. The goal is simply to take the JSON included in the POST body and publish it to the SQS queue. Here is some simple code to do that:

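```python
import json
import os

import boto3

# Get the SQS service resource
sqs = boto3.resource('sqs')

# Both values are configured as environment variables on the Lambda function
queue_name = os.environ['SQS_QUEUE']
queue_url = os.environ['SQS_URL']

# Look up the queue once, when the function container starts
queue = sqs.get_queue_by_name(QueueName=queue_name)


def lambda_handler(event, context):
    # Parse the POST body so that a missing or malformed body is rejected
    # with a 400 instead of crashing the function
    try:
        payload = json.loads(event.get('body') or '')
    except ValueError:
        return {'statusCode': 400, 'body': 'Invalid JSON'}

    # Send the message to SQS
    queue.send_message(
        QueueUrl=queue_url,
        MessageBody=json.dumps(payload)
    )

    return {
        'statusCode': 200,
        'body': 'OK'
    }
```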

Copy and paste this into your "Function Code" section.

You'll notice we use two environment variables, which we now need to configure. Scroll down on the edit page to the "Environment Variables" section, click Edit, and add SQS_QUEUE with the Name of your queue, and SQS_URL with the URL of your SQS queue.

Finally, back in the Lambda function, click "Deploy". If you now click the "API Gateway" trigger in the designer and expand its details, you can find the URL of the function.
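If you'd like to exercise the handler logic on your own machine before redeploying changes, one option is to pass the queue in rather than creating it at import time, so a fake object can stand in for SQS. This is a refactored sketch, not the code deployed above: `make_handler` and `RecordingQueue` are hypothetical names, and the sketch rejects bodies that are not valid JSON with a 400 response.

```python
import json


def make_handler(queue):
    """Build a Lambda-style handler that forwards JSON bodies to `queue`.

    `queue` is anything with a boto3-style send_message(MessageBody=...) method.
    """
    def handler(event, context):
        try:
            payload = json.loads(event.get('body') or '')
        except ValueError:
            return {'statusCode': 400, 'body': 'Invalid JSON'}
        queue.send_message(MessageBody=json.dumps(payload))
        return {'statusCode': 200, 'body': 'OK'}
    return handler


class RecordingQueue:
    """Local stand-in for the SQS Queue resource that records sent bodies."""

    def __init__(self):
        self.sent = []

    def send_message(self, **kwargs):
        self.sent.append(kwargs['MessageBody'])
        return {'MessageId': 'local-test'}
```

In the deployed function, you could keep the module-level `queue` lookup and set `lambda_handler = make_handler(queue)`, while local tests pass a `RecordingQueue` instead.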

Test the function

Let's submit some JSON to the URL. If all goes well, it should end up in our SQS queue. I am using Postman for this, but you can use anything you choose to submit a request to the URL.

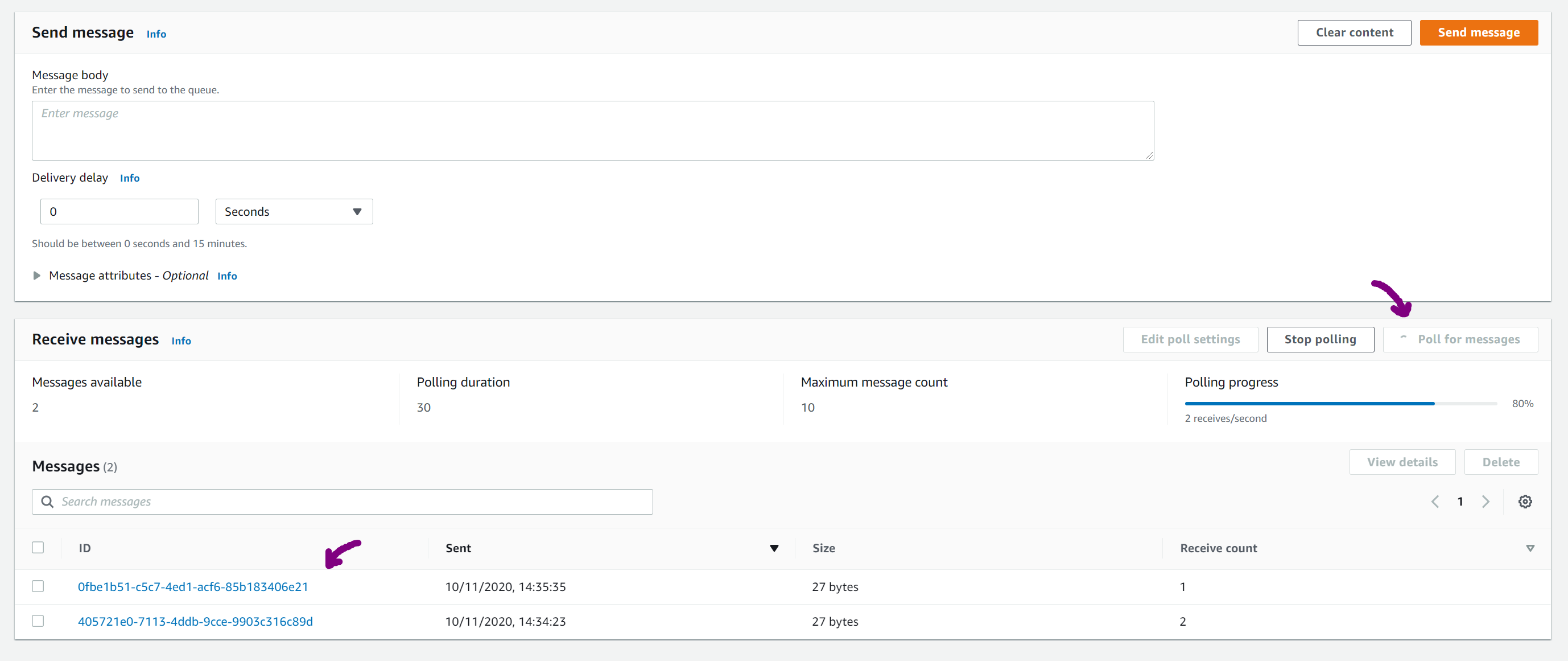
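If you'd rather script the test than use Postman, Python's standard library can send the same request. In this sketch, `build_webhook_request` and `send_test_webhook` are hypothetical helper names, and the URL you pass in is the API Gateway URL from the previous step.

```python
import json
import urllib.request


def build_webhook_request(url, payload):
    """Build (but do not send) a JSON POST request to the webhook URL."""
    data = json.dumps(payload).encode('utf-8')
    return urllib.request.Request(
        url,
        data=data,
        headers={'Content-Type': 'application/json'},
        method='POST',
    )


def send_test_webhook(url, payload, timeout=10):
    """POST `payload` to `url` and return (status code, response text)."""
    request = build_webhook_request(url, payload)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, response.read().decode('utf-8')
```

Calling `send_test_webhook(url, {"type": "test.event"})` against a working deployment should return a 200 status with the `OK` body from the handler; `urlopen` raises `urllib.error.HTTPError` for error statuses such as a 500.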

Now go to your SQS queue, click the "Send and receive messages" button, and then the "Poll for messages" button. You should see a message show up with the body of the request.

Opening the message and clicking on the "Body" tab should reveal our message.

Now we have an internet-exposed URL which will push data into a queue for us. You can add this URL to whatever application you intend to receive webhooks or information from. Next, we will write a client to download those messages to our computer and submit them to a local web server.

AWS login for the client

Finally, we need to give the client a login to AWS, and access to our SQS queue.

Let's add a policy for the client. Go to the IAM "Policies" section (or Click Here), and create a new policy.

Click on the JSON tab. As with the Lambda function, paste the following policy, again using the ARN of your SQS queue:

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "CbkTutorialClient1",
            "Effect": "Allow",
            "Action": "sqs:ListQueues",
            "Resource": "*"
        },
        {
            "Sid": "CbkTutorialClient2",
            "Effect": "Allow",
            "Action": [
                "sqs:DeleteMessage",
                "sqs:GetQueueUrl",
                "sqs:ChangeMessageVisibility",
                "sqs:SendMessageBatch",
                "sqs:ReceiveMessage",
                "sqs:SendMessage",
                "sqs:GetQueueAttributes",
                "sqs:ListQueueTags",
                "sqs:ListDeadLetterSourceQueues",
                "sqs:DeleteMessageBatch",
                "sqs:PurgeQueue",
                "sqs:DeleteQueue",
                "sqs:CreateQueue",
                "sqs:ChangeMessageVisibilityBatch",
                "sqs:SetQueueAttributes"
            ],
            "Resource": "your:sqs:arn:goes:here"
        }
    ]
}
```

Click "Review Policy" and give it a name, then create the policy.

Go over to IAM in AWS, go to the "Users" section (or Click Here), and click "Add User".

Give the user a name, and select "Programmatic Access".

On the "Set Permissions" window, select "Attach existing policies directly", and find the policy we just created. Click "Next: Tags", "Next: Review", and "Create User".

This will take you to a page with a .csv download, an access key ID, and a secret access key. These are the values our client will use to authenticate to AWS. Copy them now, as you will not be able to retrieve the secret access key again later.

The Client

Alright, moving things locally: we need a client to check this queue and submit the body of each request to some arbitrary URL. I will post an overview of the code here; however, it may be better to view this project on GitHub.

Click here to go to the Github repository for the client

This project is slightly more complex than it needs to be, since I use it as a basis for a lot of local work; you can certainly make it simpler. In short, the client uses the environment variables below to periodically check the queue and submit each request to some URL on your computer or inside your network.

requirements.txt:

```
boto3==1.9.144
botocore==1.12.253
certifi==2020.6.20
chardet==3.0.4
docutils==0.15.2
idna==2.10
jmespath==0.10.0
python-dateutil==2.8.1
python-dotenv==0.10.1
requests==2.24.0
s3transfer==0.2.1
six==1.15.0
urllib3==1.25.10
```

.env file:

```
DESTINATION_URL=
SQS_QUEUE=
POLL_DELAY=1
AWS_ACCESS_KEY_ID=
AWS_SECRET_ACCESS_KEY=
AWS_DEFAULT_REGION=
```
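A missing value in this file tends to surface later as a less obvious error (for example, an unset `SQS_QUEUE` only fails when boto3 tries to look the queue up). A small startup check can catch that early. This is a sketch with hypothetical function names; it treats every setting except `POLL_DELAY` as required and gives `POLL_DELAY` a default of one second. boto3 reads `AWS_ACCESS_KEY_ID`, `AWS_SECRET_ACCESS_KEY`, and `AWS_DEFAULT_REGION` from the environment on its own, which is why the client never passes them explicitly.

```python
import os

# Settings from the .env file that the client cannot run without
REQUIRED_SETTINGS = [
    'DESTINATION_URL',
    'SQS_QUEUE',
    'AWS_ACCESS_KEY_ID',
    'AWS_SECRET_ACCESS_KEY',
    'AWS_DEFAULT_REGION',
]


def missing_settings(environ=os.environ):
    """Return the required settings that are unset or empty."""
    return [name for name in REQUIRED_SETTINGS if not environ.get(name)]


def poll_delay(environ=os.environ, default=1.0):
    """Read POLL_DELAY (seconds), falling back to `default` if unset or empty."""
    value = environ.get('POLL_DELAY')
    return float(value) if value else default
```

After `load_dotenv()`, you might call `missing_settings()` and exit with a message listing the result if it is non-empty.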

consume_sqs.py:

```python
import datetime
import json
import os
import time

import boto3
import requests
from dotenv import load_dotenv

# Load DESTINATION_URL, SQS_QUEUE, POLL_DELAY and the AWS credentials from .env
load_dotenv()


class SqsListener:
    def __init__(self, queue_name):
        self.queue_name = queue_name
        self.queue = None

    def get_queue(self):
        # Look the queue up once and reuse it on later polls
        if self.queue is None:
            sqs = boto3.resource('sqs')
            self.queue = sqs.get_queue_by_name(QueueName=self.queue_name)
        return self.queue

    def get_messages(self):
        return self.get_queue().receive_messages()

    def process_messages(self, with_class=None):
        for message in self.get_messages():
            if with_class is not None:
                processor = with_class(message)
                if processor.validate():
                    if processor.execute() is False:
                        processor.fail()
            # Every received message is deleted, even if validation or processing failed
            self.delete_message(message)

    def delete_message(self, message):
        message.delete()


class SqsProcessor:
    def __init__(self, message):
        self.raw_message = message
        try:
            self.parsed_message = self.deserialize(message.body)
        except (TypeError, ValueError):
            # The body was missing or was not valid JSON
            self.parsed_message = None

    def deserialize(self, body):
        return json.loads(body)

    def validate(self):
        return True

    def execute(self):
        return True

    def fail(self):
        pass


class LocalWebsitePassThrough(SqsProcessor):
    def validate(self):
        # execute() calls .get() on the message, so only accept JSON objects
        return isinstance(self.parsed_message, dict)

    def execute(self):
        received_time = datetime.datetime.now().strftime("%I:%M%p")
        request_type = self.parsed_message.get('type')
        try:
            # Forward the webhook body to the local site as a JSON POST
            response = requests.post(os.getenv('DESTINATION_URL'), json=self.parsed_message)
            print('[' + received_time + '] Request Type - ' + str(request_type) + ' --> Response: ' + str(response.content))
        except Exception as e:
            print('[' + received_time + '] Request Type - ' + str(request_type) + ' --> Error: ' + str(e))
            # Returning False lets process_messages() call fail()
            return False
        return True


if __name__ == '__main__':
    listener = SqsListener(os.getenv('SQS_QUEUE'))
    while True:
        listener.process_messages(LocalWebsitePassThrough)
        # POLL_DELAY is in seconds; default to 1 if it is not set
        time.sleep(float(os.getenv('POLL_DELAY', '1')))
```

Please view the GitHub page for instructions if you'd like to run this code as-is.

Conclusion

We've added a Lambda function and an SQS queue. We gave the Lambda function permission to publish to the queue and exposed it to the internet via a static URL. Finally, we've written a client to download information from the queue and submit it to our local website.

We should now have a fully functional system. We can add this URL to Stripe, or other services, and when we are ready, pull down a stream of webhooks which will get submitted to our local website. While this does cost money to operate, the cost is minimal: the Lambda function does very little work per request, and because we are only charged for Lambda while the function is running, its cost is negligible.

Please let me know if you have any questions or comments about this article on Twitter or in the comments section.

About Me

I am a developer who is currently living in Ho Chi Minh City, Vietnam. I work with Laravel, VueJS, React, and other technologies in order to provide software to companies. Formerly a Microsoft Dynamics consultant.